Overview

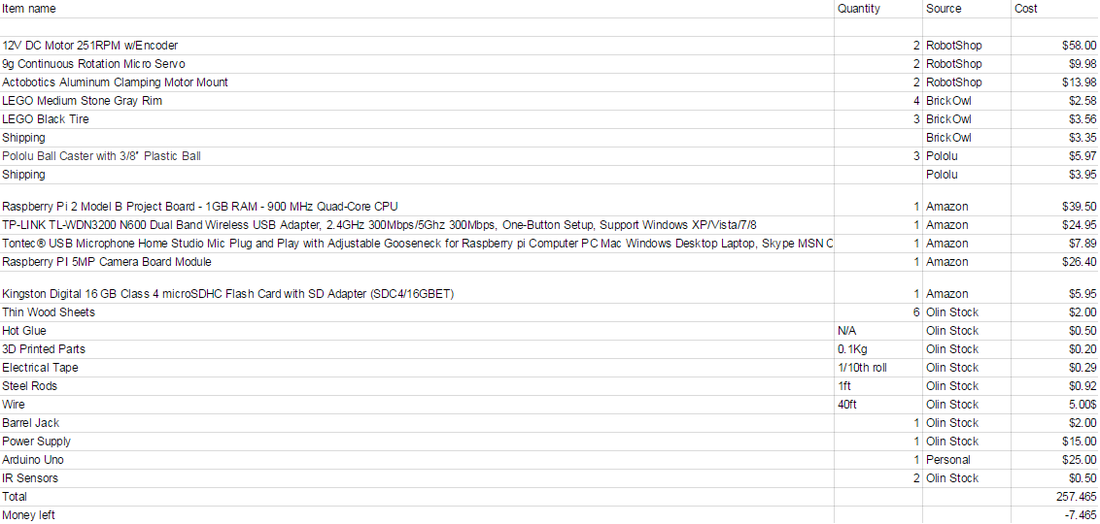

The goal of the FETCHBOT.Azimuth project was to create a robot which, at your voiced command, could delicately navigate the forest of wine glasses and fine china on your dining table to bring you dining accessories (such as the diamond salt shaker you selected from your grandmother's collection to complement your chandelier). Azimuth was created with a budget of only 250 dollars, so that we could bring this luxurious pinnacle of convenience to you at a reasonable price.

Project Goals

Ideally, Azimuth's process would be to:

- Respond to voice commands

- Navigate to a specified location

- Pick up a salt or pepper shaker

- Carry the item to a specified location

- Gently deposit the item

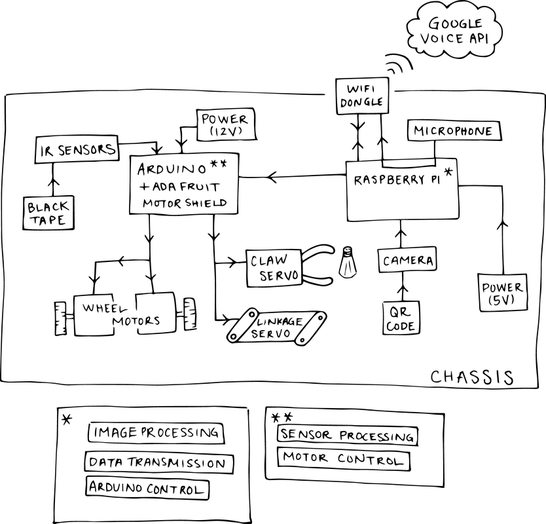

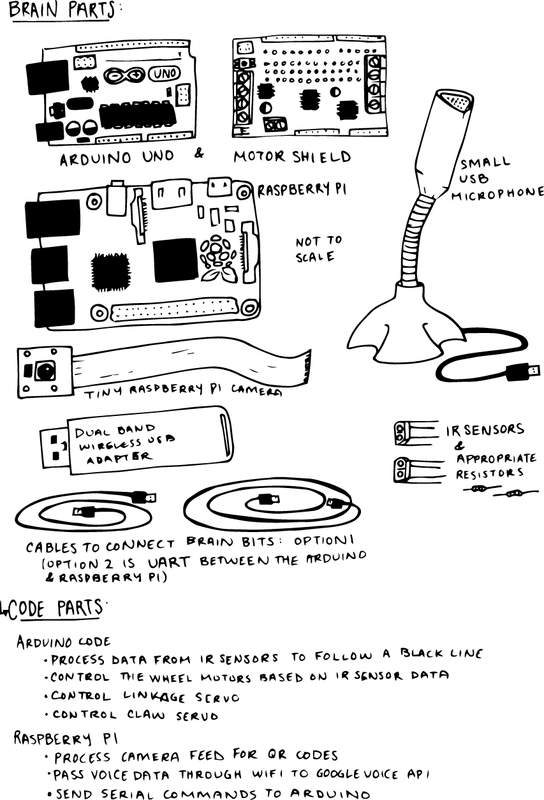

These actions would be carried out by an Arduino UNO working with a Raspberry Pi that is connected to WiFi, a camera, and a microphone. Azimuth would operate autonomously, with all brains and power on-board. The Raspberry Pi and Arduino UNO would work together, processing images and voice input to coordinate the motors.
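As a rough illustration of how the five goals above could be ordered in software, here is a minimal Python sketch of a mission cycle. The stage names and the `next_stage` helper are hypothetical and are not taken from the project's code; they only mirror the list of goals.

```python
from enum import Enum, auto


class Stage(Enum):
    """Hypothetical stage names mirroring the five project goals."""
    LISTEN = auto()         # wait for and respond to a voice command
    GO_TO_PICKUP = auto()   # navigate to the requested item
    GRAB = auto()           # pick up the salt or pepper shaker
    GO_TO_DROPOFF = auto()  # carry the item to the requested location
    RELEASE = auto()        # gently deposit the item


def next_stage(stage: Stage) -> Stage:
    """Advance to the following stage, wrapping back to LISTEN after RELEASE."""
    order = list(Stage)  # Enum members iterate in definition order
    return order[(order.index(stage) + 1) % len(order)]
```

A real controller would only advance when the current stage reports success (for example, when the expected location marker is seen); this sketch leaves that check out.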

System Diagram

Learning Goals

- Integrate a complex electrical-, mechanical-, and software-intensive system

- Forge better CAD, code, and documentation skills

- Be proud of a cooperative creative process and result

- Bring joy to the masses in the form of table-based convenience

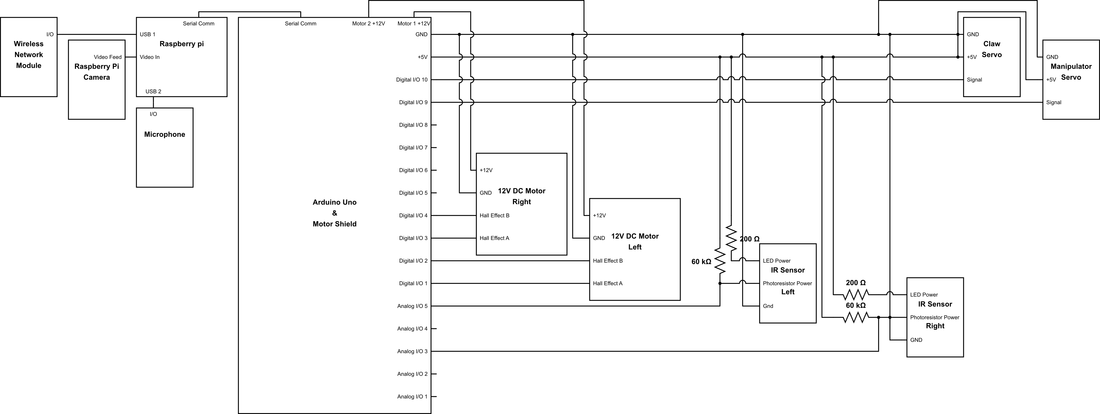

Circuit Diagram

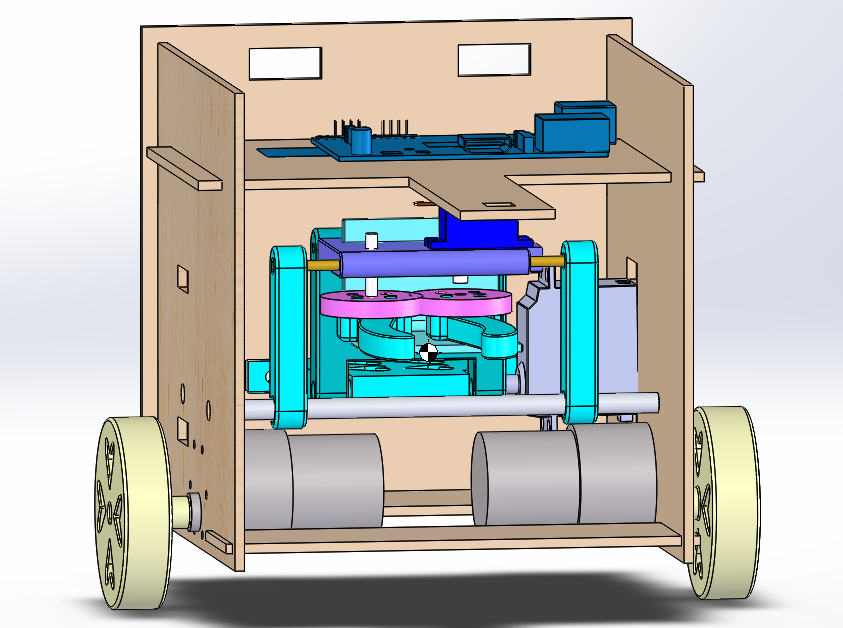

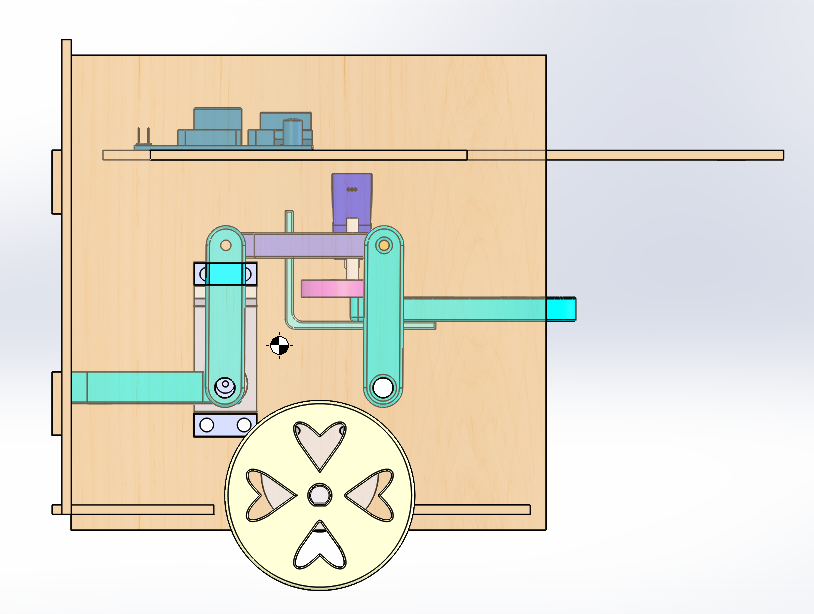

Mechanical Design

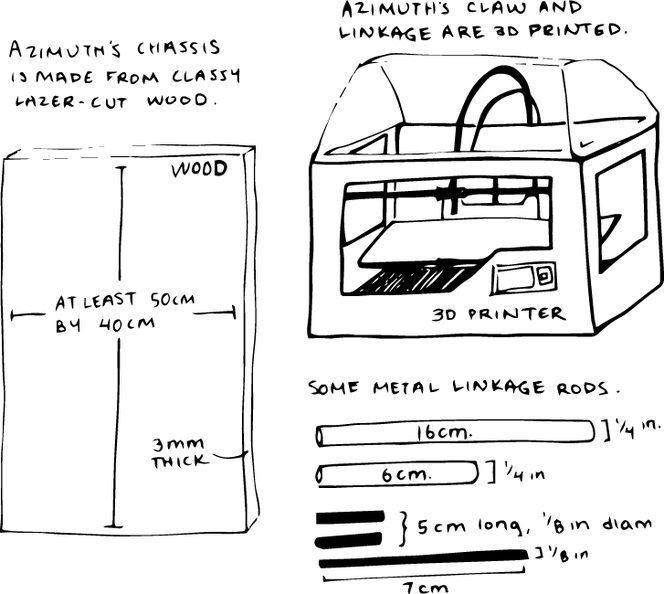

The grab-and-lift mechanism employs a parallelogram 4-bar linkage and a gentle claw, both printed in plastic. A hobby servo drives the linkage to raise and lower the claw, and a mini servo opens and closes the claw to grasp items. This mechanism was chosen because it is simple and robust, and capable of lifting and carrying a relatively heavy object.
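To make the geometry concrete, the following Python sketch models an idealized parallelogram linkage: the claw mount moves along a circular arc without rotating, so the claw stays level as it is raised. The function names, the dimensions used in any call, and the simplification of ignoring link mass and friction are all assumptions for illustration, not measurements from Azimuth.

```python
import math


def claw_pose(crank_angle_deg: float, crank_length_mm: float,
              pivot_height_mm: float) -> tuple[float, float]:
    """Return (reach_mm, height_mm) of the claw mount.

    The crank angle is measured from horizontal. In a parallelogram
    linkage the coupler only translates, so the claw keeps its orientation.
    """
    theta = math.radians(crank_angle_deg)
    reach = crank_length_mm * math.cos(theta)
    height = pivot_height_mm + crank_length_mm * math.sin(theta)
    return reach, height


def holding_torque_nmm(crank_angle_deg: float, crank_length_mm: float,
                       load_mass_g: float) -> float:
    """Static torque (N*mm) at the crank pivot needed to hold the load.

    Idealized: ignores the mass of the links and any friction.
    """
    weight_n = load_mass_g / 1000.0 * 9.81
    return weight_n * crank_length_mm * math.cos(math.radians(crank_angle_deg))
```

Under these assumptions the torque demand is highest when the crank is horizontal and falls toward zero as the crank approaches vertical, which is one way to check whether a given hobby servo has enough margin for the intended load.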

Fabrication:

Linkage pieces, claws, and wheels were 3D printed. The chassis was laser-cut from 3 mm plywood. Molded urethane was added to the claws to increase gripping friction.

Click the file button below to download the CAD files and fabricate your own FetchBot chassis.

| azimuth.zip | |
| --- | --- |
| File Size: | 7657 kb |
| File Type: | zip |

Software and Sensors

The FETCHBOT software is split between Python code running on the Raspberry Pi and Arduino code running on the Arduino UNO. The Arduino reads two IR sensors and steers the robot along a line by comparing the readings against thresholds in the code. It also controls the drive motors and servo motors, which lets the robot perform more complex maneuvers such as grabbing and pivoting. The Raspberry Pi handles the more computationally intensive work: image processing and voice-to-text using the Google Voice API. The camera's image feed is scanned for QR codes that tell the Pi where the robot is, and the Google Voice API converts speech to text, which is then parsed into specific instructions for what to transport and where. When the robot arrives at the correct location, the Pi sends a series of commands over a UART connection telling the Arduino to stop, grab the condiment, and resume its path toward a new target location. At the delivery site, the Pi sends similar commands to drop the condiment off, after which the robot resumes its path and idles until it receives more instructions.
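The Pi-side flow described above (turn transcribed speech into an instruction, then tell the Arduino to stop, act, and resume) might look roughly like the Python sketch below. The vocabulary, the single-byte command codes, and the function names are hypothetical stand-ins; the project's actual protocol lives in its repository and may differ.

```python
import io

# Hypothetical vocabulary and command codes, chosen only for illustration.
ITEMS = ("salt", "pepper")
CMD_STOP, CMD_GRAB, CMD_RELEASE, CMD_RESUME = b"S", b"G", b"R", b"F"


def parse_command(transcript: str):
    """Extract (item, destination) from text like 'bring the salt to seat 2'.

    Returns None if no known item or no 'to <place>' phrase is found.
    """
    words = transcript.lower().split()
    item = next((w for w in words if w in ITEMS), None)
    if item is None or "to" not in words:
        return None
    # Treat everything after the last "to" as the destination.
    last_to = len(words) - 1 - words[::-1].index("to")
    destination = " ".join(words[last_to + 1:])
    if not destination:
        return None
    return item, destination


def send_sequence(port, at_pickup: bool) -> None:
    """Write stop, then grab or release, then resume to a serial-like port.

    `port` is any object with a write(bytes) method.
    """
    action = CMD_GRAB if at_pickup else CMD_RELEASE
    for command in (CMD_STOP, action, CMD_RESUME):
        port.write(command)


# Demo with an in-memory buffer standing in for the UART connection.
demo_port = io.BytesIO()
send_sequence(demo_port, at_pickup=True)
```

On the robot, `port` could be a pyserial `serial.Serial` object opened on whichever device and baud rate the Arduino uses; both of those values are setup-specific and are not specified here.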

For more information, including the full list of required dependencies and all of the code, please visit our GitHub repository.
