Changes and Reflection

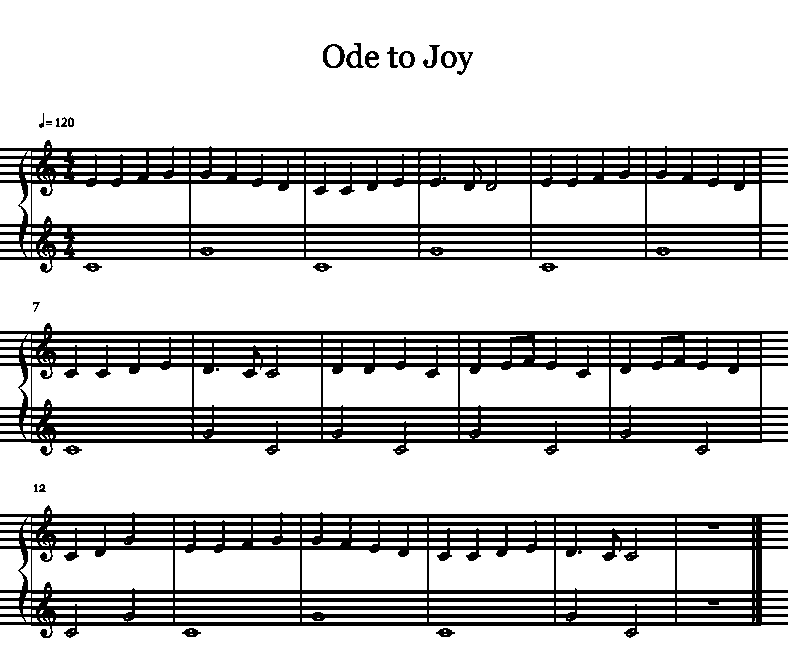

Overall, our project was well-scoped for our team's strengths. The challenges we chose to embrace were primarily software-related, which matched our interests and skills. At the start of the project, we set a realistic minimum software deliverable: using a MIDI file to control a single octave of notes. In addition, we set two stretch goals: more motors (from the software perspective, a trivial scaling problem) and the ability to use computer vision to read sheet music (a much more involved problem).

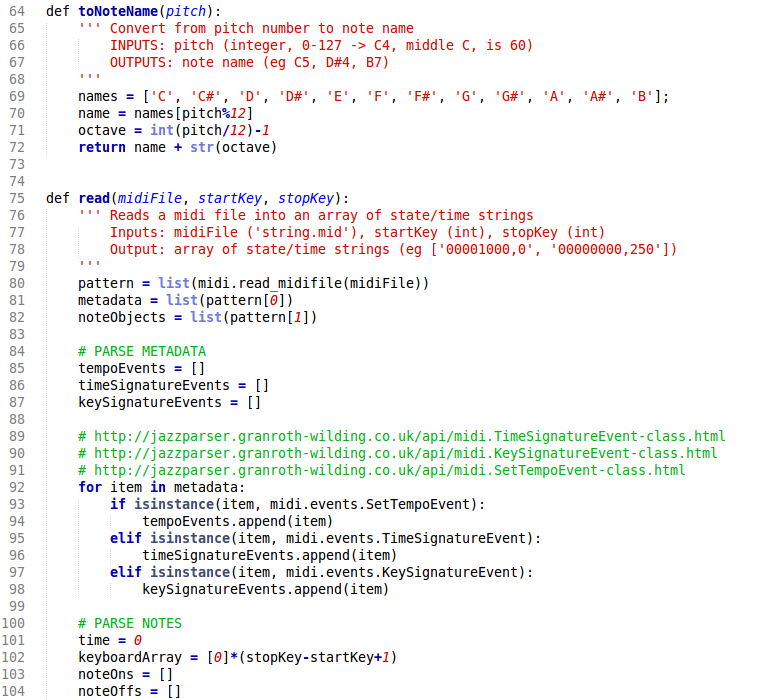

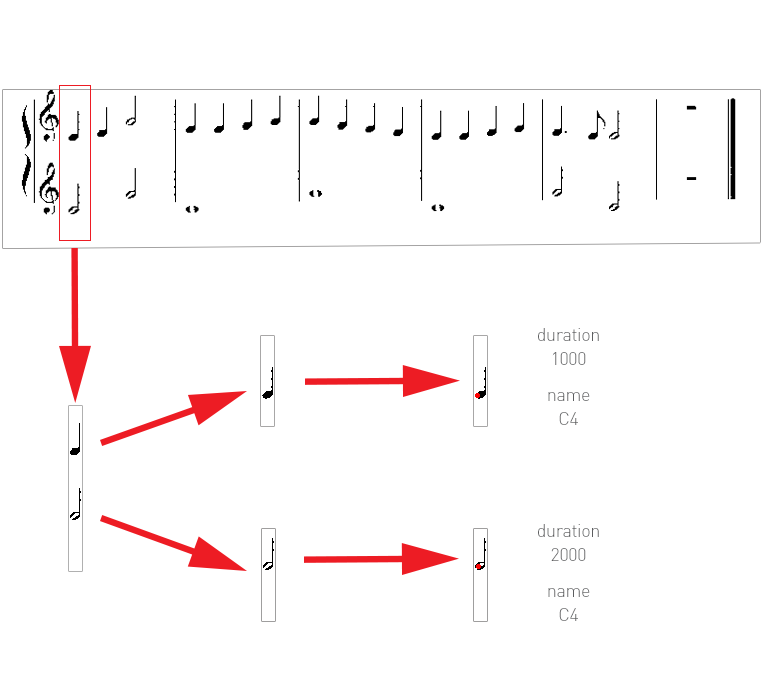

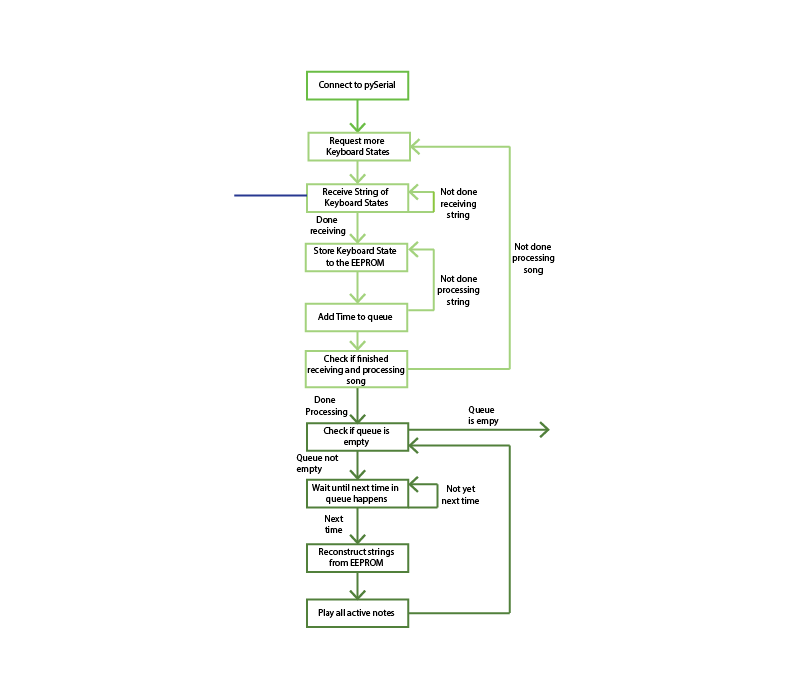

Setting such a feasible minimum goal for the software system and dividing the problem among multiple team members allowed us to be flexible. This flexibility proved unexpectedly useful when an early version of our serial communication ran into the memory limit of our Arduino and, subsequently, the request processing time of the serial link. These limitations forced us to rethink our infrastructure, which opened up several learning opportunities. Working within the memory limit and around the serial delay led us to explore in depth the types of memory on an Arduino and the capabilities of each, and it prompted us to think about how to encode data compactly.
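To illustrate what compact encoding can look like for a single octave, here is a minimal sketch in Python of the host side. It is not the encoding we actually used: the 4-byte layout, the names `pack_event` and `unpack_event`, and the millisecond duration field are all assumptions chosen for illustration. Each event stores the twelve notes of the octave as one bit each in a 16-bit mask, followed by a 16-bit duration.

```python
import struct

NOTES_PER_OCTAVE = 12

# Hypothetical wire layout (an assumption, not the project's real format):
# little-endian uint16 note mask followed by uint16 duration in milliseconds.
EVENT_FORMAT = "<HH"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)  # 4 bytes per event


def pack_event(notes, duration_ms):
    """Pack a set of note indices (0-11) and a duration into 4 bytes."""
    mask = 0
    for note in notes:
        if not 0 <= note < NOTES_PER_OCTAVE:
            raise ValueError(f"note index out of range: {note}")
        mask |= 1 << note  # one bit per note in the octave
    if not 0 <= duration_ms <= 0xFFFF:
        raise ValueError(f"duration does not fit in 16 bits: {duration_ms}")
    return struct.pack(EVENT_FORMAT, mask, duration_ms)


def unpack_event(data):
    """Inverse of pack_event: return (set of note indices, duration_ms)."""
    mask, duration_ms = struct.unpack(EVENT_FORMAT, data)
    notes = {n for n in range(NOTES_PER_OCTAVE) if mask & (1 << n)}
    return notes, duration_ms


if __name__ == "__main__":
    # A three-note chord (indices 0, 4, 7) held for 500 ms.
    payload = pack_event({0, 4, 7}, 500)
    print(len(payload), unpack_event(payload))
```

Under this assumed layout, a chord costs the same four bytes as a single note, so the size of a song depends only on the number of events, which makes it easier to budget against a small memory.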

We started out thinking about our system in the abstract, and as a result we built our software as several modular subsystems. This allowed us to move forward with the other subsystems independently, even when one took longer than expected. Thus, our goals remained largely the same throughout the project, with the minor change that at the end of the semester we spent much of our time on meeting our stretch goals.